18 December 2013

01 December 2013

Arcade Volleyball on the first date? Let's use Hamming Codes for protection...

Even the world's worst Perl code has nothing on this.

I used to be a computer-store-rat who would beg/borrow and occasionally purchase copies of Compute!'s Gazette (which, IIRC, was oriented towards the Commodore line of computers). In each month's exciting issue, there would be "source code" listings that you could type in so that you could end up with some computer utility or game. Typing in the source code involved typing in long streams of hexadecimal numbers from listings like this one.

I had no money to send away for a tape or a floppy disk with the corresponding binary code, so, if I wanted one of these utilities, I had to type in all of this stuff. There was a utility that would read in all of these numbers, line-by-line, and perform a simple check to make sure that the typist didn't make any typing errors.
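For the curious, the listing-checker idea fits in a few lines. This is only an illustration: the actual utility's checksum algorithm isn't described here, so the sum-modulo-256 scheme, the line layout (data bytes followed by one check byte), and the function names are all assumptions.

```python
def line_checksum(data_bytes):
    """Hypothetical checksum: sum of the data bytes, modulo 256."""
    return sum(data_bytes) % 256


def check_line(hex_line):
    """Return True if the last byte of a typed-in line matches the
    checksum of the bytes before it.

    Assumed layout (not the magazine's actual format): space-separated
    two-digit hex bytes, with the final byte acting as the checksum.
    """
    values = [int(token, 16) for token in hex_line.split()]
    if len(values) < 2:
        return False  # need at least one data byte plus the check byte
    *data, expected = values
    return line_checksum(data) == expected


# Made-up sample line: 0xA9 + 0x00 + 0x8D + 0x20 = 342, and 342 % 256 = 0x56.
print(check_line("A9 00 8D 20 56"))  # correctly typed line
print(check_line("A9 00 8D 21 56"))  # one mistyped digit
```

A plain sum like this would miss two swapped bytes, so treat it as the simplest possible stand-in for whatever the real checker did.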

This process was slightly less painful than slamming one's hand in a car-door a few times. I only managed to type all of these numbers in for a few programs that interested me. Luckily, I managed to satisfy my curiosity for programming computers in lots of other ways....

....

A former co-worker of mine (who is one of the best software engineers I have ever worked with) spent his early years with computers in a similar way to me. This gentleman has a really good sense of humor. I also have the impression that his personal life is more "Norman Rockwell" and not "Hunter S. Thompson". He's a fairly traditional guy.

This is all background to the following story, which amused me greatly: it seems that my former co-worker, during his teen-aged years, was both interested in computers and this certain young woman (who turned out to be his future wife). One of his very early dates with this young woman consisted of her reading strange letters/digits to him as he typed into his first computer. My former co-worker related all of these facts to us one day while we were in the lunch room at work. And then....somebody in the room considered all of this and asked my co-worker "so....let me see if I understand this correctly....are you telling us that you had hex with your future wife on the first date?".

The room exploded in (good-natured) laughter....

18 October 2013

REAMDE comes to life

Taken from this story:

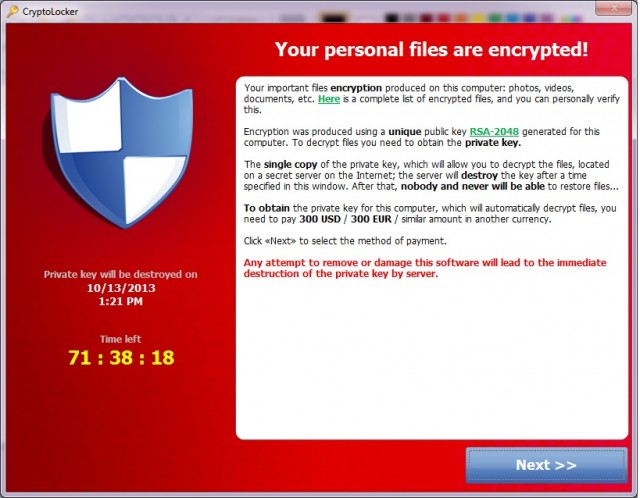

According to multiple participants in the month-long discussion, CryptoLocker is true to its name. It uses strong cryptography to lock all files that a user has permission to modify, including those on secondary hard drives and network storage systems. Until recently, few antivirus products detected the ransomware until it was too late. By then, victims were presented with a screen like the one displayed on the computer of the accounting employee, which is pictured above. It warns that the files are locked using a 2048-bit version of the RSA cryptographic algorithm and that the data will be forever lost unless the private key is obtained from the malware operators within three days of the infection.

[...]

"The server will destroy the key after a time specified in this window," the screen warns, displaying a clock that starts with 72:00:00 and counts down with each passing second. "After that, nobody and never will be able to restore files. To obtain the private key for this computer, which will automatically decrypt files, you need to pay 300 USD / 300 EUR / similar amount in another currency."

My observation on this matter is this: someplace, somewhere, I think that Neal Stephenson must be laughing right now.

14 October 2013

Fun With Real-World Network Problems

A long time ago, on a ${Dayjob} far, far away, somebody from Support found me in the lunch-room and said "Kevin, can you give me a hand? I'm having trouble with a Customer-site and I can't figure out what is going wrong". "Okay", I said, as we walked back to his desk.

As we walked back to his desk, the Support guy warned me "look, this Customer is not going to be easy to deal with. His network is down; people are yelling at him, and so he's yelling at us". OK, I can deal with this...

So, I sat down in front of the Support guy's computer, which already had a desktop/VPN sharing session set up to the remote customer site. The Customer (Site Administrator) was soon on the speakerphone, and we were soon in the thick of the problem.

The first thing I said was "Look, let me try to get up to speed here. What is going wrong?". OK, so I learned that their DHCP server was only working for some of this site's end-users. It seemed that every user who wasn't able to get a DHCP address was calling the site's help-desk.

The Site Administrator was...upset...and he even reminded us that everything should be working just fine -- after all -- our company had just replaced his old DHCP server with a brand-new and rather expensive server one month ago. From his perspective, it simply was not acceptable that this new piece of hardware was not performing flawlessly....

So, I started to get the Site Administrator to run some basic diagnostics. Was the DHCP server configured correctly? Was it running? Were the disk drives acting reasonably? Etc. Everything checked out just fine.

Many users at this site continued to not be able to get onto the network....

At this time, I was out of simple diagnostics to run. I decided that I really wanted to see a network capture of the DHCP traffic. I asked the Site Administrator to run the network trace. He did this, not quite in the manner that I was hoping for, but now I was starting to see more information.

With the trace enabled, I was now starting to see the DHCP server perform its work, on the wire. The challenging thing for me at this point was that the network trace that the Site Administrator was running showed me a high-level view of ALL of the traffic that was hitting the system's DHCP server. At least 75% of this traffic related to DHCP interactions in which the client was able to obtain a DHCP address....and picking out failing sessions was like picking out a needle in a haystack that was getting tossed around by a tornado. After a few tense minutes of watching traffic like this (sprinkled with shouts from the Site Administrator of "there's another failure right there!!!"), I finally was able to get the Site Administrator to send one of his techs out to the location of the problem (wireless) network so I would have a known client to look at. Once the tech was at the network location with his test MacBook, I obtained the MAC address of the test MacBook's wireless interface. Then I had the Site Admin adjust the network capture so that it recorded only the DHCP traffic from the tech's MacBook.
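Narrowing a capture to one client's DHCP traffic can be sketched like this. The story doesn't say which capture tool was running at the customer site, so the tcpdump/libpcap-style filter syntax is an assumption, and the helper name and the MAC address in the example are made up for illustration.

```python
import re

MAC_PATTERN = re.compile(r"^[0-9a-f]{2}(:[0-9a-f]{2}){5}$")


def dhcp_filter_for_client(client_mac):
    """Build a tcpdump/libpcap-style capture filter that keeps only
    DHCP traffic (UDP ports 67 and 68) to or from one client MAC."""
    mac = client_mac.strip().lower().replace("-", ":")
    if not MAC_PATTERN.match(mac):
        raise ValueError(f"not a MAC address: {client_mac!r}")
    return f"ether host {mac} and (udp port 67 or udp port 68)"


# Placeholder address, not the one from the real capture.
print(dhcp_filter_for_client("00-11-22-AA-BB-CC"))
# prints: ether host 00:11:22:aa:bb:cc and (udp port 67 or udp port 68)
```

One trade-off worth keeping in mind: a filter this narrow also hides everything that isn't DHCP (ARP, for example), and it misses replies that are sent to the broadcast MAC rather than to the client's own address.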

Despite the fact that we were now looking at a much smaller subset of the network's total traffic, it still wasn't obvious what was going wrong. The DHCP traffic from the failing MacBook seemed....strangely slow....and the DHCP negotiation wasn't working correctly....but the problem wasn't immediately obvious. The Site Admin was becoming more and more unhappy.

Many users at this site continued to not be able to get onto the network....

I was nearly out of ideas. The trace that I was running wasn't showing any obvious problems. Finally, I told the Site Admin "look, can we please run a trace in the manner that I originally wanted, which would be a complete network capture in which I can look at ALL of the traffic, including all of the headers and complete contents of all of the packets?". After a few minutes of back and forth, I eventually got this capture started (capturing traffic as seen by the DHCP server), and then we got the tech with the MacBook to run one more DHCP negotiation. Then I got the Site Admin to send me the complete network capture. I ran back to my desk and opened the capture in Wireshark.

Now that I was back at my desk and I was able to think more clearly, I was hoping that I would somehow be able to employ the Feynman Problem Solving Algorithm to identify where the problem was. Unfortunately, my brain was unable to successfully implement this algorithm.

However.....I did notice one strange thing in the trace. I noticed that all of the DHCP traffic from the failing DHCP client wasn't destined for a broadcast address. Instead it was destined for the specific IP address of the shiny new DHCP server running on the brand new appliance at the customer site. Also, since the DHCP requests were destined for a specific IP address, the destination MAC address in the Ethernet headers was also set to a unicast MAC address. Furthermore, I noticed that at some point early on in the DHCP negotiation, the DHCP client was actually issuing an ARP-request with the IP address of the DHCP server inside....somehow indicating that the DHCP client simultaneously DID and DID NOT know the MAC address of the DHCP server. I didn't know what to make of this....so I wandered back to the Support guy's desk, where we still had a speakerphone connection with the Site Admin of the failing site. I verified that the destination IP address that I had seen was correct....and then I tried to verify the destination MAC address that I had seen in the packet trace.

Here is where we were able to start dragging The Real Problem out into the light of day: the MAC addresses didn't match! In fact, we soon established that:

- there were no DHCP-helpers on this network -- it was a big but simple network.

- all of the failing DHCP clients were wireless clients, but not all wireless clients were failing -- many were working just fine.

- all of the failing DHCP clients happened to be sending their DHCP protocol traffic to a specific (correct) IP destination address but an incorrect unicast MAC address.

- all of the successful DHCP clients (both wired and wireless) were interacting with the DHCP server via IP-broadcast/MAC-broadcast addresses (the way that things usually go).

- ....and there was this weird ARP issue too: I wondered aloud "how is the tech's MacBook knowledgeable enough to send out an Ethernet frame with the destination MAC address correctly filled in with some (wrong) unicast MAC address, but then a few milliseconds later it seems to be in a state where it doesn't have this unicast entry in its ARP cache, because it is sending out an ARP-request for the DHCP server's IP address?".

I explained this situation to the Site Administrator and made the following fact very clear: the appliance that we were all trying to fix at the customer site was simply NOT GOING TO RESPOND to traffic that arrived at its NIC with a destination MAC address that wasn't either a broadcast address or an address that matched its own MAC address. Then I told the Site Admin "unfortunately, I really haven't thought of a reason why this would be occurring on your network yet....I'm not sure where this phantom MAC address is coming from....". Upon hearing these new facts, the Site Admin told us "hold on a sec..." and then he said "I'll call you back soon". Click....
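The receive rule described above boils down to a very small check. Here is a simplified sketch of it; the function name and all of the MAC addresses are hypothetical, and real NICs have extra cases (multicast, promiscuous mode) that are ignored on purpose.

```python
BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


def nic_accepts(frame_dst_mac, nic_mac):
    """Simplified receive rule: accept a frame only if it is addressed
    to the broadcast MAC or to the NIC's own MAC.

    Multicast and promiscuous mode are deliberately left out.
    """
    dst = frame_dst_mac.lower()
    return dst == BROADCAST_MAC or dst == nic_mac.lower()


# Made-up addresses for illustration only.
new_appliance_mac = "00:11:22:33:44:55"
phantom_mac = "00:aa:bb:cc:dd:ee"

print(nic_accepts(BROADCAST_MAC, new_appliance_mac))      # broadcast DHCP request
print(nic_accepts(new_appliance_mac, new_appliance_mac))  # correctly addressed unicast
print(nic_accepts(phantom_mac, new_appliance_mac))        # frame sent to the wrong MAC
```

Only the last case returns False, which is the situation the failing clients were in: right IP address, wrong destination MAC, so the frame never gets past the NIC.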

I went back to my desk to puzzle over the network traces some more. I really had no good way to explain this traffic that I was seeing at this site.

Soon after, I got my explanation. The Site Admin sent us an email that read:

I believe we've found part of the problem. There are two settings on ${famous_networking_vendor's} wireless controllers: "Broadcast Filter ARP" and "Convert Broadcast ARP Requests to Unicast". Together they do what it implies, convert Broadcast ARP requests to unicast traffic over wireless, reducing the amount of broadcast traffic. This setting has been enabled for years with no issues. The problem seems to have started after we upgraded our hardware. If I disable this feature on ${famous_network_vendor's} wireless controller then the ARP process works normally and the client doesn't lose connectivity to it.

At this point I asked the Site Admin via email "say, that destination unicast MAC address we observed in all of the failing DHCP negotiations and in those ARP-requests -- did that MAC address belong to one of the NICs on the appliance that we replaced at your site around a month ago? The MAC address seems like it would match the NIC vendor that I believe would go along with that vintage of old hardware"...

I never got a response to my query, but I am 100% certain that the answer to my question was "yes".

Problem solved. The root cause of this site's entire problem here was not the DHCP server itself, but rather how the site's specific wireless configuration was set up to deal with one (and only one unique) DHCP server.

Obviously, somebody at this site had previously attempted to set up their wireless network in some sort of an optimal way (I can see the rationale here), but, of course, this "optimal configuration" fell down the second that a new appliance was set up at this site....a new appliance with new/different MAC addresses. I learn something new every day!

[certain details of this story are a tiny bit cloudy in my mind, but I'm fairly sure that I have most of the details right. Unfortunately, the network captures that I ran on this day are long gone....]

10 September 2013

Real-life conversation I had recently...

Person: What is the deal with this....."shibboleth"....issue? I'm not even sure how to pronounce this.

Me: It is extremely ironic that you just said that.

Person: What? What does it mean?

Me: I think that you'd get a better explanation if you looked it up for yourself.

Person: Whatever... It probably doesn't matter how we pronounce it anyways.

Me: For some people, it was a matter of life-or-death.

Person: Huh?

Me: Trust me: look it up.

02 September 2013

Gonzo sed code

In the last few weeks the juxtaposition of two things has made me laugh a bit.

First, there is this quote from Neal Stephenson's REAMDE:

Like any Russian, Sokolov enjoyed a game of chess. At some level, he was never not playing it! Every morning he woke up and looked at the tiles on the ceiling of the office that was his bedroom and reviewed the positions of all of the pieces and thought about all of the moves that they might make today, what countermoves he would have to make to maximize his chances of survival.

Soon after I read this I encountered SedChess, which is a wickedly awesome implementation of chess in sed. This is a fabulous hack, somewhat akin to producing fine carpentry with a stapler! I was totally unsurprised when I first saw this hack on GitHub that all of the comments were written in Russian.

The last fabulous hack I can recall in sed was dc.sed, which was a nearly complete implementation of "dc".

I've written some gonzo code in Perl....but I bow to people who have the patience and skillz to write gonzo code in sed....

26 August 2013

29 July 2013

Mind the Gaps!

We had a nice ride yesterday. There were "only" two hills too!

It is so nice to ride with a great group of friends!

19 July 2013

18 June 2013

11 June 2013

Raid Rockingham 2013

It was a nice ride. I experienced one flat, but I rode with a great bunch of people.

09 June 2013

The Death of a Hard-Drive

Have you ever wanted to see a hard-drive die on a production system? Yeah, me neither...

The other day I was called upon to try to figure out what was going wrong on a heavily-utilized production system. I dimly recalled looking at this system several months ago. From my notes, I recalled that the system was acting a bit sluggishly back then. I even made a few performance improvements at the time.

Fast forward to this past week... The performance improvements that I made months ago helped get the system through a critical period, but now the system was acting sluggishly again. So, I analyzed the logs. There, in the logs, was a periodic warning that the hard-drives were acting a little bit flaky. I do not have any sort of physical access to this system ; the logs are all I have. The problem is that this periodic warning seems to have been occurring for a long time now, and there seems to be some resistance to replacing the hardware in this system. So, I really had to make some strong case to prove that this system was taking a nosedive and needed immediate action.

I decided to create a graph that shows the frequency of this system's warning message over time. Here is my graph:

Every point on this graph means "something bad happened". And...I think it is clear that this graph shows that "a whole lot of new badness is happening"....and that this system needs some serious fixing.
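Producing the data behind a graph like this mostly comes down to counting warning lines per day. Below is a minimal sketch of that counting step; the timestamp format, the `"disk warning"` marker text, and the sample log lines are all placeholders, since the real log format isn't shown here.

```python
from collections import Counter
from datetime import datetime


def warnings_per_day(log_lines, marker="disk warning"):
    """Count log lines containing `marker`, grouped by calendar day.

    Assumes each line starts with a timestamp such as
    "2013-06-01 04:12:55"; the marker text is a placeholder.
    """
    counts = Counter()
    for line in log_lines:
        if marker not in line:
            continue
        try:
            stamp = datetime.strptime(line[:19], "%Y-%m-%d %H:%M:%S")
        except ValueError:
            continue  # skip lines without a parseable timestamp
        counts[stamp.date().isoformat()] += 1
    return dict(sorted(counts.items()))


# Made-up sample lines, not taken from the real system.
sample = [
    "2013-06-01 04:12:55 kernel: disk warning on sda",
    "2013-06-01 09:30:01 cron: job finished",
    "2013-06-02 01:00:10 kernel: disk warning on sda",
    "2013-06-02 01:00:12 kernel: disk warning on sda",
]
print(warnings_per_day(sample))
# prints: {'2013-06-01': 1, '2013-06-02': 2}
```

The resulting day-to-count mapping can then be handed to whatever plotting tool is at hand.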

I'm crossing my fingers right now...

05 May 2013

I (don't) thrive on conflict

(picture borrowed from Ximbot)

I don't thrive on conflict....especially when wrangling a merge involving hundreds of files and many hundreds of merge conflicts.....but...when I find myself dealing with such a situation, I love....love....love using Emacs/Ediff and most especially M-x vc-resolve-conflicts.

The only other "tools" I recommend for wrangling a large merge are a good night's sleep and lots of coffee.

18 April 2013

Traffic Synchronization

Interesting article in the NYT the other day: To Fight Gridlock, Los Angeles Synchronizes Every Red Light:

Now, in the latest ambitious and costly assault on gridlock, Los Angeles has synchronized every one of its 4,500 traffic signals across 469 square miles — the first major metropolis in the world to do so, officials said — raising the almost fantastical prospect, in theory, of driving Western Avenue from the Hollywood Hills to the San Pedro waterfront without stopping once. [....] The system uses magnetic sensors in the road that measure the flow of traffic, hundreds of cameras and a centralized computer system that makes constant adjustments to keep cars moving as smoothly as possible. The city’s Transportation Department says the average speed of traffic across the city is 16 percent faster under the system, with delays at major intersections down 12 percent. Without synchronization, it takes an average of 20 minutes to drive five miles on Los Angeles streets; with synchronization, it has fallen to 17.2 minutes, the city says. And the average speed on the city’s streets is now 17.3 miles per hour, up from 15 m.p.h. without synchronized lights.

I used to work for a startup that came up with a product very similar to what is described here, except that this product was a computer networking system. We handled various Ethernet and T1 interfaces, and, the special-sauce behind our product was that our product synchronized network traffic that required Quality of Service (QoS). I was the architect of the signaling protocol that facilitated all of this (and I contributed in many other ways too).

This was an interesting system. Parts of the system used a hard-real-time OS, parts of the system involved a {thing} that looked a lot like an enterprise-class softswitch. Not a day went by at this job in which I wasn't working on some interesting problem.

Our system worked really well too: we could turn the jitter buffers essentially "off" in the various video/audio codecs that were on the endpoints that we used, and we could see very clearly that our system never dropped any packets.....even at nearly 100% load and wire-speed.

Unfortunately, we were a little bit before our time, and the market for this sort-of "near-perfect QoS" gear never really took off.

Still, when somebody like Bob Metcalfe strolls through your company's lab and says that the technology that you've been working on is "really, really cool", that makes for a good memory.

There is never a dull moment in high-tech.

13 March 2013

Bring Back Google Reader

My sentiments exactly:

http://bringgooglereaderback.com/

{sigh}

In the past few months, my favorite replacement for iGoogle has turned out to be igHome. I guess I'll have to find a replacement for Google Reader now too.

Bleh.

22 February 2013

Retransmission Dampening

A certain network protocol that I work with can spew a lot of traffic. Staggering amounts, actually.

I only have limited control over this traffic. It just shows up (sometimes as if fired by a Gatling gun), and code that I help maintain on a certain server has to deal with it. When the traffic isn't handled in a timely manner, people get upset and the phone starts ringing.

Recently, after witnessing our server starting to have difficulty handling a certain burst in traffic, I started to think to myself "how are we going to improve?". I came up with a variety of ideas.

One of my ideas went as follows:

It is computationally non-trivial to unpack everything in every PDU that arrives at the server. Under normal operations, everything is fine, and there are plenty of CPU resources for this sort of thing. However, during heavy traffic loads, my data suggests that unpacking all of these PDUs is something that I need to pay attention to.

And....one of the problems is that the {things} that are sending our server all of these PDUs almost seem to be impatient. Some of these {things} seem to operate in the following way:

- Send a PDU with a request in it

- Wait an infinitesimal amount of time

- Have you received a response yet? If "yes", we are done! However, if "no", then go back to the first step.

But...when I look at the guts of this protocol, I believe that I see a non-trivial (but not difficult either) way of limiting these re-sent packets. The code that I have in mind won't actually have to entirely unpack all of the PDUs in order to figure out which packets are re-sent packets and which packets are valid new requests.

Here is the thing that I have in mind:

- Under normal operations, everything works as it usually does.

- A "retransmission dampening filter" (RDF) will always be running.

- The RDF will let new PDUs into the system with no restrictions.

- In a CPU-efficient way, the RDF will be able to detect PDUs that are retransmissions. If a PDU has been re-transmitted by the network {thing} without having waited {N} milliseconds, then the packet will be dropped. This will spare the rest of the system from having to unpack the entire PDU, which, again, takes some CPU time.

However, before I go off and write some code to implement this, I decided that I wanted to run a simulation using some Real World data to see how much of an effect this scheme would have on overall traffic if the system were running with a {1, 2, 3} second minimum.

So, I threw together a simulator, and here are my results:

So, um, yeah....obviously I'm going to write some code soon to implement this. Of course, I'll make my code tunable, but I'm pretty sure that the default will be to enforce a 1-second minimum between retransmissions. This should be both a fun project, as well as something that will really help the server that I help maintain!
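For the curious, here is a rough Python sketch of what a retransmission dampening filter along these lines might look like. It is only an illustration under some loud assumptions, not the code that will end up on the server: the protocol isn't named here, so `cheap_key` simply pretends that the first 4 bytes of a PDU hold a request ID (a hypothetical wire layout), and the class name, the `admit` and `expire` methods, and the choice to measure the interval from the last admitted copy are all made up for this example.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


def cheap_key(sender: Tuple[str, int], pdu: bytes) -> Hashable:
    """Build a lookup key without unpacking the whole PDU.

    ASSUMPTION: the first 4 bytes of the PDU are a request ID. This is a
    hypothetical layout chosen purely for illustration.
    """
    return (sender, pdu[:4])


class RetransmissionDampeningFilter:
    """Drop retransmissions that arrive sooner than min_interval_s."""

    def __init__(self, min_interval_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.min_interval_s = min_interval_s
        self._clock = clock
        # key -> time at which a PDU with this key was last admitted
        self._last_admitted: Dict[Hashable, float] = {}

    def admit(self, key: Hashable) -> bool:
        """Return True if the PDU should be passed on for full unpacking."""
        now = self._clock()
        last = self._last_admitted.get(key)
        if last is not None and now - last < self.min_interval_s:
            return False  # impatient retransmission: drop it cheaply
        self._last_admitted[key] = now  # new request, or a patient retry
        return True

    def expire(self) -> None:
        """Forget keys old enough that they can no longer cause a drop."""
        now = self._clock()
        stale = [k for k, t in self._last_admitted.items()
                 if now - t >= self.min_interval_s]
        for k in stale:
            del self._last_admitted[k]
```

The receive loop would call `admit(cheap_key(sender, pdu))` before doing any expensive decoding, and call `expire()` every so often so that the table doesn't grow without bound. The minimum interval is a constructor argument, which keeps the thing tunable.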

21 February 2013

18 February 2013

08 February 2013

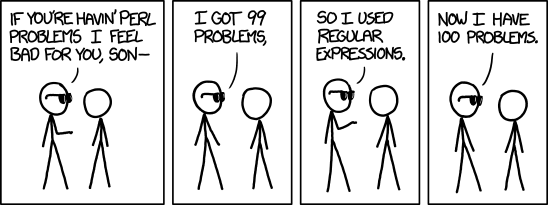

Pondering the different reactions from the two /^J.*Z.*?$/s

http://xkcd.com/1171/

Why do I get the feeling that jwz is laughing right now?

Also, why do I get the feeling that sometime today, somebody might try to explain to Jay-Z a certain technical joke -- maybe he'll smile at the joke -- and then he'll get back to making another million dollars...

03 February 2013

Praise for Tomas Holmstrom

Like every other hockey fan, I'm pretty irked with the NHL right now (thanks for the lockout, idiots....). But, this doesn't prevent me from admiring the truly remarkable career of Tomas Holmstrom, who retired recently.

Take a look at some highlights:

I think that every goalie in the NHL was glad to see Holmstrom go -- he drove them CRAZY. He scored most of his goals 3-4 feet from the net -- most of these were redirections and "garbage goals". He made a fine art out of these goals, but of course he had to work really hard and take a TON of abuse for each one.

Can you imagine having a job in which it is your task to stand in front of the goalie, trying to obscure his vision while a giant defenseman tries to move you out of the way? Oh, and then, what happens next is that some guy shoots a small hard rubber puck in your general direction at around 100mph....and while the defenseman is seriously abusing you, it is now your job to "tip" the puck with your stick, hoping to direct the puck into the net. This is what Holmstrom made into art.

I'm a hockey fan, and I cheer for the players more than I do for the teams. I'll miss seeing Homer out on the ice.


01 February 2013

Lazy Dung Beetles

The other night as I was driving home, a story about the remarkable navigation skills of dung beetles was playing on the radio:


WARRANT: They have to get away from the pile of dung as fast as they can and as efficiently as they can because the dung pile is a very, very competitive place with lots and lots of beetles all competing for the same dung. And there's very many lazy beetles that are just waiting around to steal the balls of other industrious beetles and often there are big fights in the dung piles.

I really don't know why, but when Professor Warrant, using his very Australian accent, got to the part about "lazy dung beetles", I sort-of lost it. I laughed a lot as I listened to this story.

We live in a great world in which folks like Professor Warrant can study the lowly dung beetle, and can make interesting discoveries about their remarkable skills.
