It was a nice ride, but no KOM points for me... I'm just glad that I felt strong the whole way.

Thanks for the great ride T.!

07 May 2010

06 May 2010

More fun with network graphs

My work analyzing a particular kind of network traffic has continued to bear fruit. For background, please see my earlier post "Updated graph from silly network attack".

A couple of weeks ago I was dealing with a different ${organization} than the one I described in my previous posts. They have a totally different network setup at this new site and, as I would soon learn, a totally different scale of traffic.

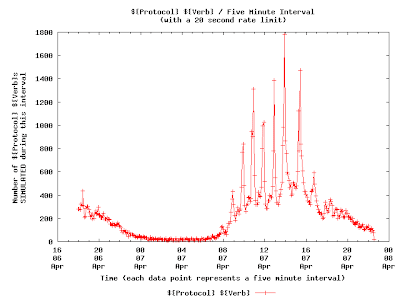

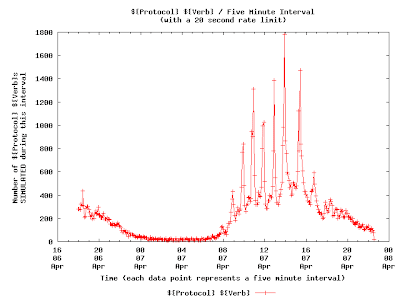

Here is a graph generated from the data that I collected at this site (same protocol as before):

If you compare this graph to the previous ${organization}'s graph, it is easy to see that this new ${organization} has a completely different scale of traffic. In fact, my data-collection scripts collected so much information in 24 hours at this site that it took some of my post-processing scripts over 20 hours to wade through all of the data!

Unfortunately, this graph shows no obvious spike in traffic that we could focus on to find a problem on the network. It does show one thing pretty clearly, though: there is a LOT of this type of traffic on this network (especially during daytime hours).
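One simple way to see a daytime pattern like this is to bucket each captured packet by hour of day and count. This is a minimal sketch, assuming the capture has already been reduced to a list of Unix timestamps (a hypothetical input format; the actual post-processing scripts aren't shown). It uses UTC for simplicity, whereas a real analysis would probably use the site's local time zone.

```python
from collections import Counter
from datetime import datetime, timezone


def hourly_counts(timestamps):
    """Count captured packets per hour of day (0-23).

    Assumes `timestamps` are Unix epoch seconds (hypothetical format) and
    buckets them in UTC rather than the site's local time.
    """
    counts = Counter(
        datetime.fromtimestamp(ts, tz=timezone.utc).hour for ts in timestamps
    )
    # Report all 24 hours, including hours with no traffic at all
    return [counts.get(hour, 0) for hour in range(24)]


# Hypothetical sample: three packets in the 09:00 hour, one in the 02:00 hour (UTC)
sample = [9 * 3600 + 5, 9 * 3600 + 60, 9 * 3600 + 3599, 2 * 3600 + 10]
print(hourly_counts(sample))
```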

My position is that there is TOO MUCH of this type of traffic on this network. To justify that position, I decided to look more deeply into the data. Luckily, this was pretty easy to do, since my post-processing tools also generate a list of nodes sorted by how much of this traffic each one generates. With this list, I was able to quickly find some nodes that were responsible for a huge amount of this traffic.
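A sorted per-node list like this can be produced by counting captured packets per source node. This is a minimal sketch, assuming each packet has been reduced to a hypothetical `(timestamp, node)` tuple; it is not the actual post-processing tool.

```python
from collections import Counter


def rank_talkers(records):
    """Return (node, packet_count) pairs, busiest node first.

    `records` is an iterable of (timestamp, node) tuples, a hypothetical
    format standing in for whatever the capture scripts actually emit.
    """
    counts = Counter(node for _ts, node in records)
    # most_common() with no argument returns every node, sorted by count
    return counts.most_common()


records = [
    (0.00, "node-a"), (0.01, "node-a"), (0.02, "node-a"),
    (1.50, "node-b"),
    (3.00, "node-c"), (3.01, "node-c"),
]
for node, count in rank_talkers(records):
    print(f"{node:10s} {count}")
```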

In fact, after looking at the data for a while, I learned that the node on the network that generated the most traffic (by far) was transmitting this traffic using the following pattern:

```
top_of_loop:
    do this 8 times
        send this ${Protocol} ${Verb}
        wait 10ms
    done
    sleep 6 seconds
    goto top_of_loop;
```
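To put numbers on this pattern: each pass through the loop sends 8 messages and takes about 6.08 seconds (eight 10 ms waits plus the 6-second sleep, ignoring transmission time). The arithmetic below is a sketch that assumes the node runs this loop continuously all day and compares it with a budget of one message every 10 or 20 seconds. Under that assumption it works out to roughly 113,700 messages per day, about 13 times a 10-second budget and 26 times a 20-second one.

```python
# Timing taken from the observed pattern above
BURST_SIZE = 8      # messages per burst
GAP_S = 0.010       # wait after each message (10 ms)
SLEEP_S = 6.0       # sleep between bursts
DAY_S = 86_400      # seconds per day; assumes the loop never stops

cycle_s = BURST_SIZE * GAP_S + SLEEP_S   # about 6.08 s per loop iteration
rate_per_s = BURST_SIZE / cycle_s        # about 1.32 messages per second
per_day = rate_per_s * DAY_S             # about 113,700 messages per day

print(f"{rate_per_s:.2f} msgs/s, about {per_day:,.0f} msgs/day")
for limit_s in (10, 20):
    # A limit of one message per `limit_s` seconds allows DAY_S / limit_s per day
    allowed_per_day = DAY_S / limit_s
    print(f"{limit_s}-second limit: {per_day / allowed_per_day:.1f}x over budget")
```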

Anyone familiar with this ${Protocol} knows that it is unreasonable for a single node to transmit more than one of these ${Verbs} onto the network every, oh... 10 seconds (and, if everything works out, the node should STOP transmitting this traffic at that point...). One could argue that 20 seconds is an even more reasonable value in this situation. At higher transmission rates, this node is effectively mounting a denial-of-service attack, whatever its intentions are. This is simply an unreasonable amount of traffic for one node to generate.

It turns out that there is a straightforward way to rate-limit all of this traffic on this network. I don't control this ${organization}'s network, but before recommending this configuration to them, I wanted to model what the traffic would look like with this rate limit in effect.

Modeling this configuration turned out to be easy enough. All I had to do was modify my post-processing scripts to simulate the proposed rate limit, then run all of the original data that I collected at this site through the modified script. This gives us a very good idea of what effect a per-node 10-second rate limit would have on this network's ${Protocol} traffic.
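The modified script isn't shown here, but the core of such a simulation can be sketched as a filter over the captured records. This minimal sketch assumes hypothetical `(timestamp, node)` tuples sorted by time, measures each node's interval from its last *allowed* message, and treats blocked messages as simply disappearing (a real node might retry, which this ignores). `burst_pattern` is a hypothetical helper that replays the looping pattern observed above so the effect on that one node can be seen.

```python
def rate_limit(records, min_interval_s):
    """Simulate a per-node rate limit over (timestamp, node) records.

    A record is kept only if at least `min_interval_s` seconds have passed
    since the last record *kept* for the same node; all other records are
    treated as dropped. `records` must be sorted by timestamp.
    """
    last_kept = {}
    kept = []
    for ts, node in records:
        prev = last_kept.get(node)
        if prev is None or ts - prev >= min_interval_s:
            last_kept[node] = ts
            kept.append((ts, node))
    return kept


def burst_pattern(node, duration_s, burst=8, gap_s=0.010, sleep_s=6.0):
    """Generate records that follow the observed 8-message burst / 6 s sleep loop."""
    records, t = [], 0.0
    while t < duration_s:
        for _ in range(burst):
            records.append((t, node))
            t += gap_s
        t += sleep_s
    return records


one_hour = burst_pattern("noisy-node", 3600)
for limit in (10, 20):
    kept = rate_limit(one_hour, limit)
    print(f"{limit}-second limit: {len(one_hour)} -> {len(kept)} messages")
```

Under these assumptions, a 10-second limit keeps only the first message of every other burst, and a 20-second limit keeps one message per four bursts. A fixed-window or token-bucket limiter would give somewhat different counts.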

Here is a graph that shows the overall effect of a 10-second rate limit:

Here is a graph that shows the overall effect of a 20-second rate limit:

Again, putting some sort of rate-limit rule into effect on this network shouldn't be controversial; there is really no reason for any node on the network to be hammering it with this type of traffic. Discussions with ${organization} about the details of this rate-limit rule are ongoing.

I'm just happy that I could analyze this data, point out some obvious problems, suggest a solution, and provide a way of simulating the results of my suggestion. I am optimistic that my rate-limiting scheme will go a long way towards making this ${organization}'s network a lot more usable and responsive.